Improving Content-Based Retrieval Systems Using the Relevance Feedback Paradigm

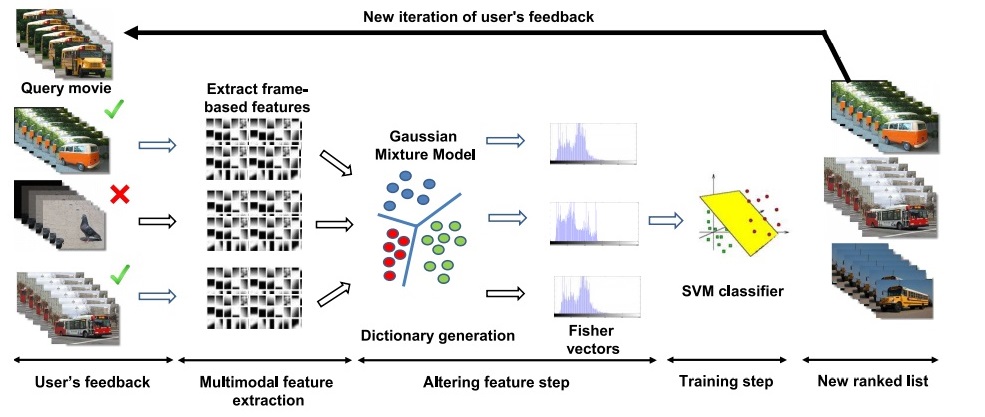

- Relevance Feedback using the Fisher kernel framework (RFFK);

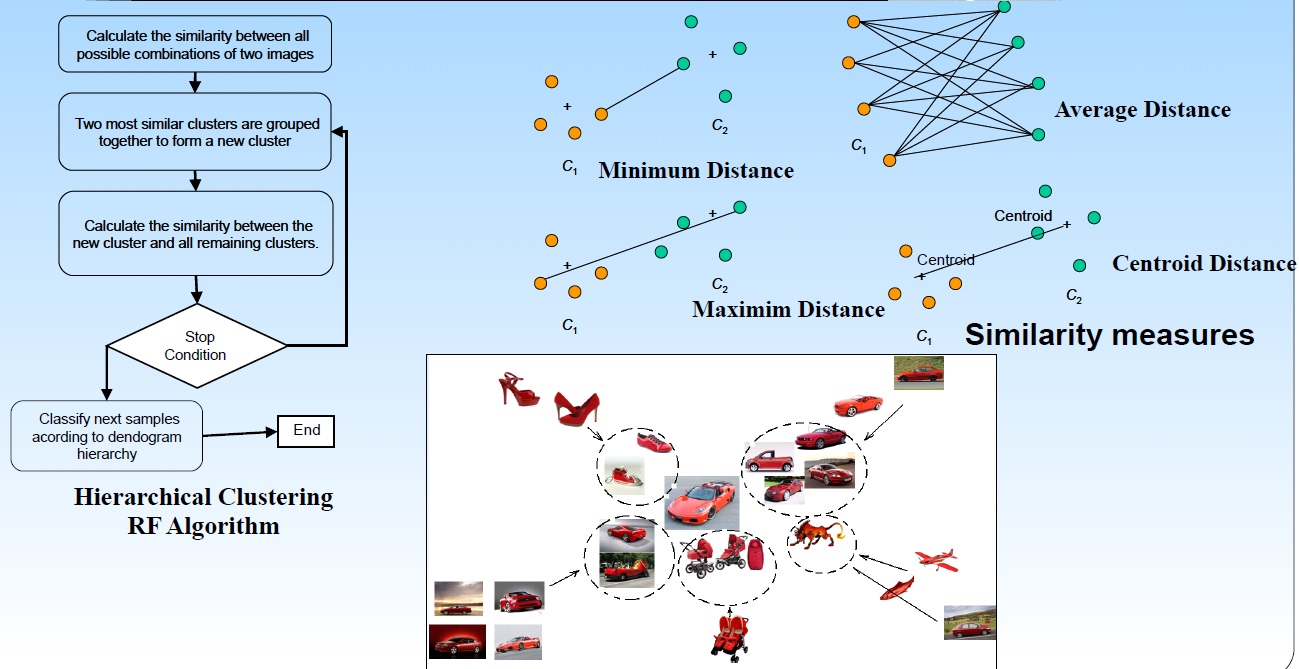

- Hierarchical Clustering Relevance Feedback for Content-Based Image Retrieval;

- Ionuţ Mironică, Bogdan Ionescu, Jasper Uijlings, Nicu Sebe, "Fisher Kernel Temporal Variation-based Relevance Feedback for Video Retrieval", Computer Vision and Image Understanding (CVIU), accepted paper 2015 (40 pages, ISI Impact Factor 1.56 - DOI:10.1016/j.cviu.2015.10.005).

- Ionuţ Mironică, Bogdan Ionescu, Constantin Vertan, "The Influence of the Similarity Measure to Relevance Feedback", 20th European Signal Processing Conference - EUSIPCO 2012, August 27-31, Bucharest, Romania, 2012.

- Bogdan Ionescu, Klaus Seyerlehner, Ionuţ Mironică, Constantin Vertan, Patrick Lambert, "Automatic Web Video Categorization using Audio-Visual Information and Hierarchical Clustering Relevance Feedback", 20th European Signal Processing Conference - EUSIPCO 2012, August 27-31, Bucharest, Romania, 2012.

- Ionuţ Mironică, Bogdan Ionescu, Constantin Vertan, "Hierarchical Clustering Relevance Feedback for Content-Based Image Retrieval", IEEE/ACM 10th International Workshop on Content-Based Multimedia Indexing, 27-29 June, Annecy, France, 2012.

- B. Ionescu, K. Seyerlehner, Ionuţ Mironică, C. Vertan, P. Lambert, "An Audio-Visual Approach to Web Video Categorization", Multimedia Tools and Applications, special issue on "Multimedia on the Web", 2012 (ISI Impact Factor 1.3).

- Bogdan Boteanu, Ionuţ Mironică, Bogdan Ionescu, "A Relevance Feedback Perspective to Image Search Result Diversification", IEEE International Conference on Intelligent Computer Communication and Processing, September 4-6, Cluj-Napoca, Romania, 2014.


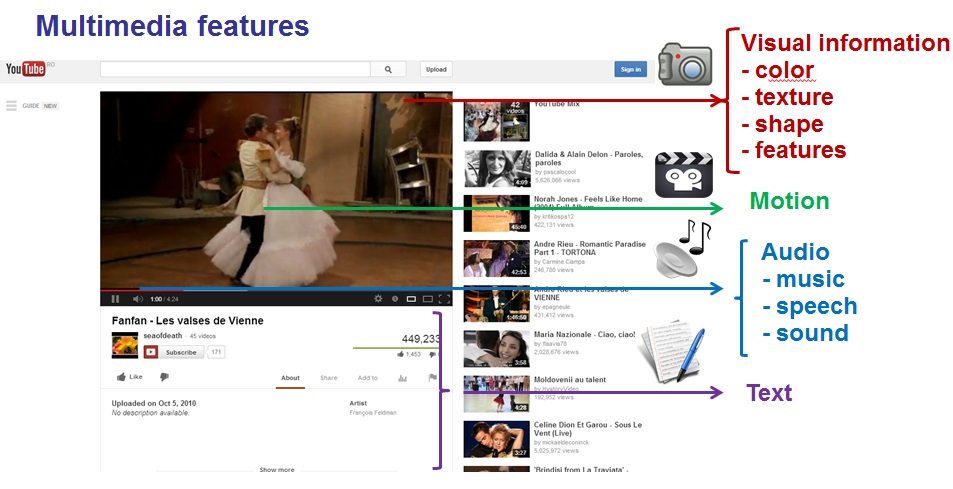

Despite the high variability of the content descriptors used (color, shape, texture, features, motion, audio, text and metadata) and of the classification techniques, Content Based Multimedia Retrieval (CBMR) systems are inherently limited by the gap between the real world and its representation through computer vision techniques. On one hand, there is a sensor gap, i.e. the discrepancy between the real world and its projection captured by imaging devices. On the other hand, there is a semantic gap between the knowledge automatically extracted from the recorded data and its semantic meaning (e.g. yellow pears have their own color and shape properties, but similar properties can be shared by other objects, such as a yellow car).

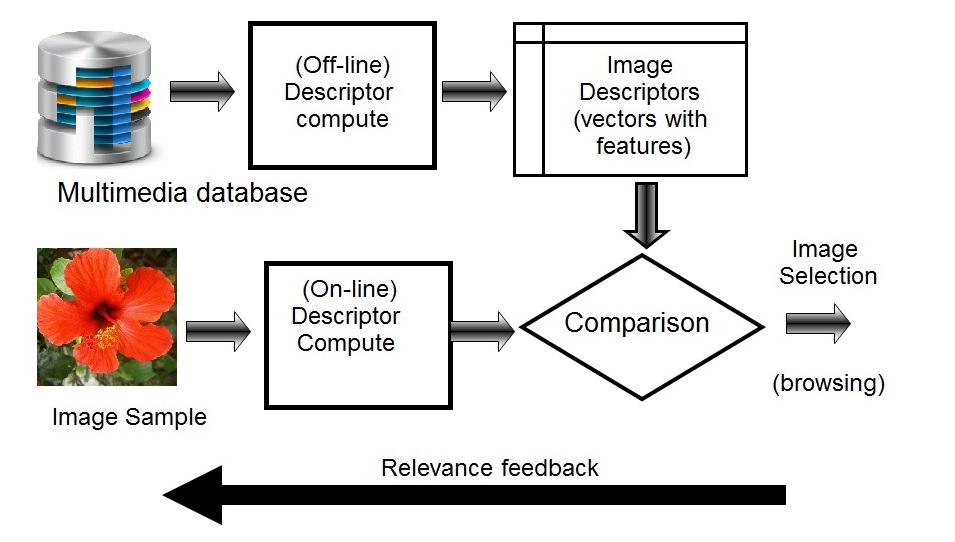

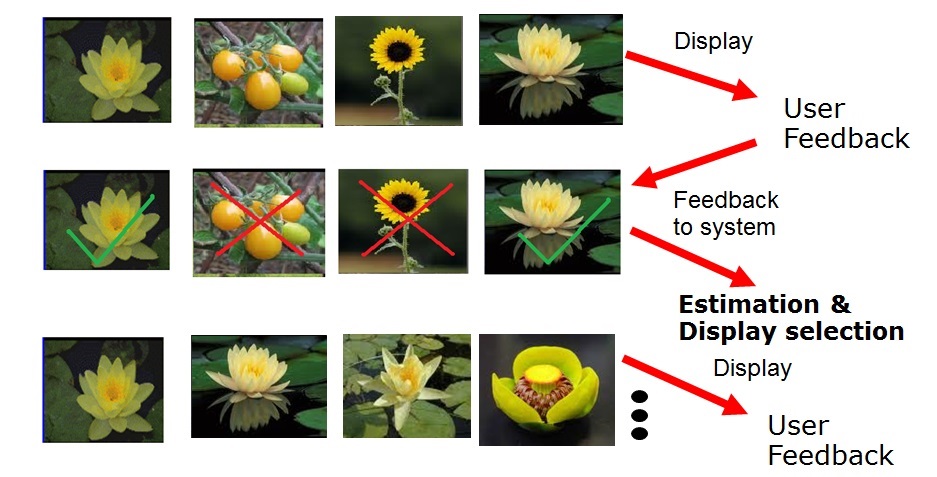

Current development of content-based multimedia retrieval systems focuses on overcoming these limitations and narrowing the gap between low-level features and high-level semantic concepts. One adopted solution is to bring human expertise directly into the retrieval process, an approach known as Relevance Feedback (RF). A general RF scenario can be formulated as follows: for a given retrieval query, the user marks the returned results as relevant or non-relevant. The system then uses this feedback to compute a better representation of the information need, and the retrieval is refined accordingly. Relevance feedback can go through one or more iterations of this sort. In effect, the system response improves based on query-specific ground truth supplied by the user. During my research, I have developed various algorithms that improve the indexing performance by using relevance feedback.
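The feedback loop described above can be illustrated with a Rocchio-style query update. This is a classic textbook formulation, used here only as a sketch; it is not the RFFK or hierarchical clustering method listed in this section. The toy 2-D descriptors, the item names, and the `alpha`, `beta` and `gamma` weights are all hypothetical.

```python
from math import sqrt


def mean_vector(vectors, dim):
    """Component-wise mean of a list of vectors (zero vector if the list is empty)."""
    if not vectors:
        return [0.0] * dim
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def rocchio_update(query, relevant, non_relevant, alpha=1.0, beta=0.75, gamma=0.25):
    """Move the query toward relevant examples and away from non-relevant ones."""
    dim = len(query)
    pos = mean_vector(relevant, dim)
    neg = mean_vector(non_relevant, dim)
    return [alpha * q + beta * p - gamma * n for q, p, n in zip(query, pos, neg)]


def rank(query, database):
    """Return item names sorted by Euclidean distance to the query (closest first)."""
    def distance(vec):
        return sqrt(sum((a - b) ** 2 for a, b in zip(query, vec)))
    return sorted(database, key=lambda name: distance(database[name]))


# Hypothetical descriptors: (amount of yellow, roundness of shape).
database = {
    "pear": [0.9, 0.8],
    "car": [0.9, 0.1],
    "banana": [0.8, 0.9],
    "bus": [0.7, 0.0],
}
query = [0.9, 0.4]

initial_ranking = rank(query, database)

# One feedback iteration: the user marks "pear" as relevant, "car" as non-relevant.
refined_query = rocchio_update(query, [database["pear"]], [database["car"]])
refined_ranking = rank(refined_query, database)

print(initial_ranking)
print(refined_ranking)
```

In this toy setting the yellow car initially outranks the pear because both share the same color value, which is the semantic gap in miniature; a single round of feedback moves the fruit-like items to the top.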


Content-Based Video Classification

- Ionuţ Mironică, Ionuţ Duţă, Bogdan Ionescu, Nicu Sebe, "A Modified Vector of Locally Aggregated Descriptors Approach for Fast Video Classification", Multimedia Tools and Applications (MTAP), (31 pages, ISI Impact Factor 1.3 - DOI:10.1007/s11042-015-2819-7).

- Ionuţ Mironică, Ionuţ Duţă, Bogdan Ionescu, Nicu Sebe, "Beyond Bag-of-Words: Fast Video Classification with Fisher Kernel Vector of Locally Aggregated Descriptors", IEEE International Conference on Multimedia and Expo - ICME, 29 June - 3 July, Torino, Italy, 2015.

- Ionuţ Mironică, Jasper Uijlings, Negar Rostamzadeh, Bogdan Ionescu, Nicu Sebe, "Time Matters! Capturing Variation in Time in Video using Fisher Kernels", ACM Multimedia, 21-25 October, Barcelona, Spain, 2013.

- Negar Rostamzadeh, Gloria Zen, Ionuţ Mironică, Jasper Uijlings, Nicu Sebe, "Daily Living Activities Recognition via Efficient High and Low Level Cues Combination and Fisher Kernel Representation", IEEE International Conference on Image Analysis and Processing, ICIAP, Napoli, Italy, September, 2013.

- Ionuţ Mironică, B. Ionescu, P. Knees, P. Lambert, "An In-Depth Evaluation of Multimodal Video Genre Categorization", IEEE International Workshop on Content-Based Multimedia Indexing, 17-19 June, Veszprém, Hungary, 2013.

- Ionuţ Mironică, Bogdan Ionescu, C. Rasche, Patrick Lambert, "A Visual-Based Late-Fusion Framework for Video Genre Classification", IEEE ISSCS - International Symposium on Signals, Circuits and Systems, July 11-12, Iasi, Romania, 2013 (4 pages).

- Ionuţ Mironică, Cătălin Mitrea, Bogdan Ionescu, Patrick Lambert, "A Fisher Kernel Approach for Multiple Instance Based Object Retrieval in Video Surveillance", Advances in Electrical and Computer Engineering (AECE), (10 pages, ISI Impact Factor 0.6).

- Cătălin Mitrea, Ionuţ Mironică, Bogdan Ionescu, Radu Dogaru, "Fast Support Vector Classifier for Automated Content-based Search in Video Surveillance", IEEE ISSCS - International Symposium on Signals, Circuits and Systems, July 9-10, Iasi, Romania, 2015 (4 pages).

- Cătălin Mitrea, Ionuţ Mironică, B. Ionescu, R. Dogaru, "Multiple Instance-based Object Retrieval in Video Surveillance: Dataset and Evaluation", IEEE International Conference on Intelligent Computer Communication and Processing, September 4-6, Cluj-Napoca, Romania, 2014.



Along with the advances in multimedia and Internet technology, a huge amount of data, including digital video and audio, is generated on a daily basis. This makes video in particular one of the most challenging types of data to process. Video processing and analysis has been the subject of a vast amount of research in the information retrieval literature. Until recently, the best video search approaches were mostly restricted to text-based solutions, which process keyword queries against text tokens associated with the video, such as speech transcripts, closed captions, social data, and so on. Their main drawback is limited automation, because they require human input. The use of other modalities, such as visual and audio, has been shown to improve retrieval performance, helping to further bridge the inherent gap between real-world data and its computer representation. The target is to allow automatic descriptors to reach a higher semantic level of description, similar to the one provided by manually obtained text descriptors.

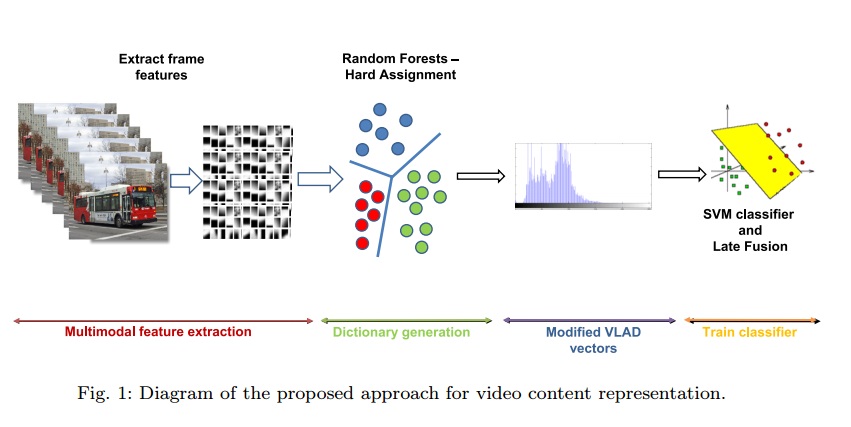

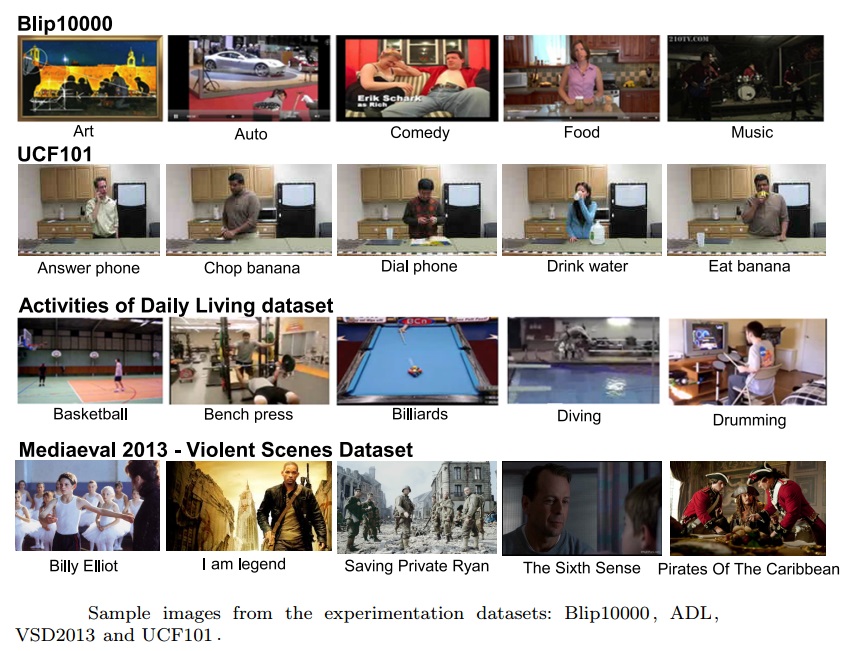

Existing state-of-the-art algorithms for video classification achieve promising performance on benchmarks for many research challenges, ranging from genre classification to event and human activity recognition. Even if these methods are designed to solve a single application, they can be adapted to a broad category of video classification tasks. A weak point of many video processing approaches lies in the content description and training frameworks, which stand as a basis for any higher-level processing steps. For instance, deep learning techniques come with very high complexity, which translates into significant processing time for optimizing the complex network architectures. Also, video is temporal data, and one of its defining characteristics is its changing/moving content. A number of approaches attempt specifically to capture that temporal information, e.g., local motion features, dense trajectories, and spatio-temporal volumes [6], to provide better representative power. However, these approaches generate a large amount of data, which may lead to high computational complexity on large-scale video datasets. One way to reduce this amount of information is to exploit frame-based features, where each global feature captures the information of a single video frame.

In this context, I proposed several new video content description frameworks that are general enough to address a broad category of video classification problems while remaining computationally efficient. They combine the fast representation provided by Random Forests and the VLAD framework with the high accuracy of Fisher Kernels and their ability to capture temporal variations.
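To make the frame-based aggregation idea concrete, here is a minimal sketch of standard VLAD encoding over per-frame feature vectors. It assumes a codebook of centers is already available (for example from k-means) and does not reproduce the modified, Random Forest-based variant from the papers; the function names and toy values are hypothetical.

```python
from math import sqrt


def nearest_center(x, centers):
    """Index of the codebook center closest to x (squared Euclidean distance)."""
    return min(
        range(len(centers)),
        key=lambda k: sum((a - b) ** 2 for a, b in zip(x, centers[k])),
    )


def vlad_encode(frame_features, centers):
    """Aggregate per-frame features into one fixed-length video descriptor.

    Each feature is assigned to its nearest center, and the residuals
    (feature minus center) are accumulated per center. The concatenated
    residuals are then L2-normalised.
    """
    dim = len(centers[0])
    residuals = [[0.0] * dim for _ in centers]
    for x in frame_features:
        k = nearest_center(x, centers)
        for i in range(dim):
            residuals[k][i] += x[i] - centers[k][i]
    flat = [v for block in residuals for v in block]
    norm = sqrt(sum(v * v for v in flat))
    return [v / norm for v in flat] if norm > 0 else flat


# Toy example: three frames with 2-D global features and a 2-word codebook.
centers = [[0.0, 0.0], [1.0, 1.0]]
frames = [[0.1, 0.0], [0.0, 0.2], [1.0, 0.8]]

video_descriptor = vlad_encode(frames, centers)
print(video_descriptor)
```

The descriptor length is the number of centers times the feature dimension, regardless of how many frames the video contains, which is what keeps this kind of representation cheap for large video collections.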


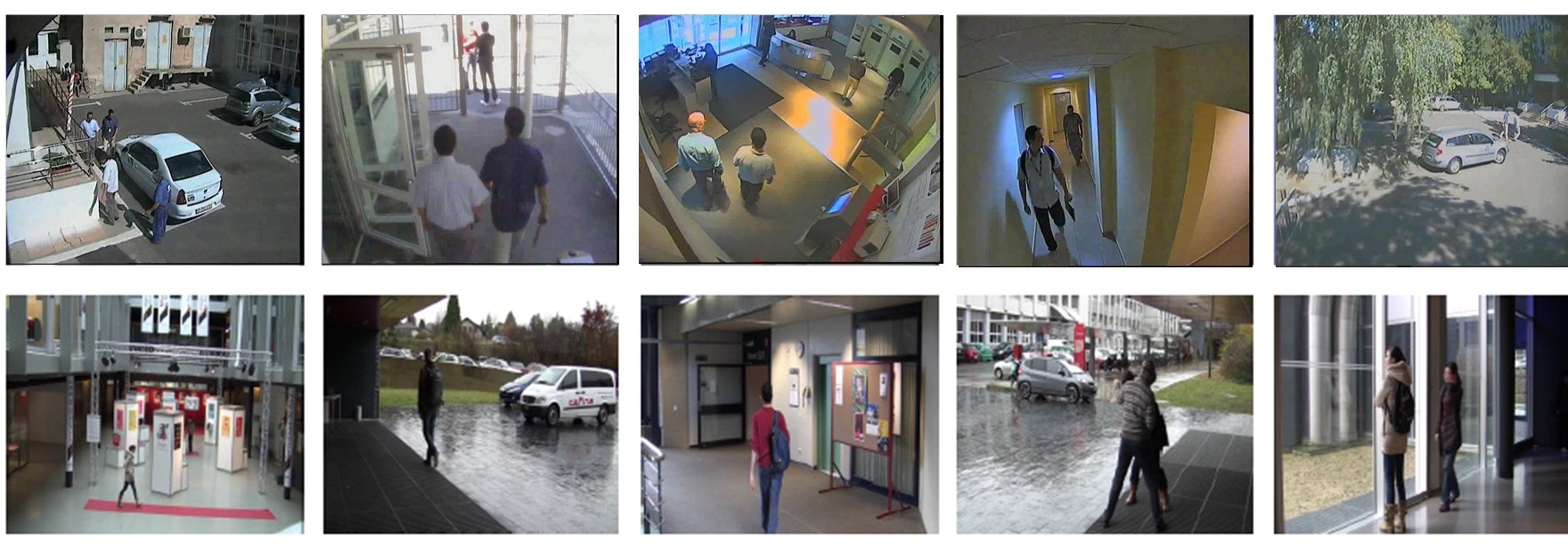

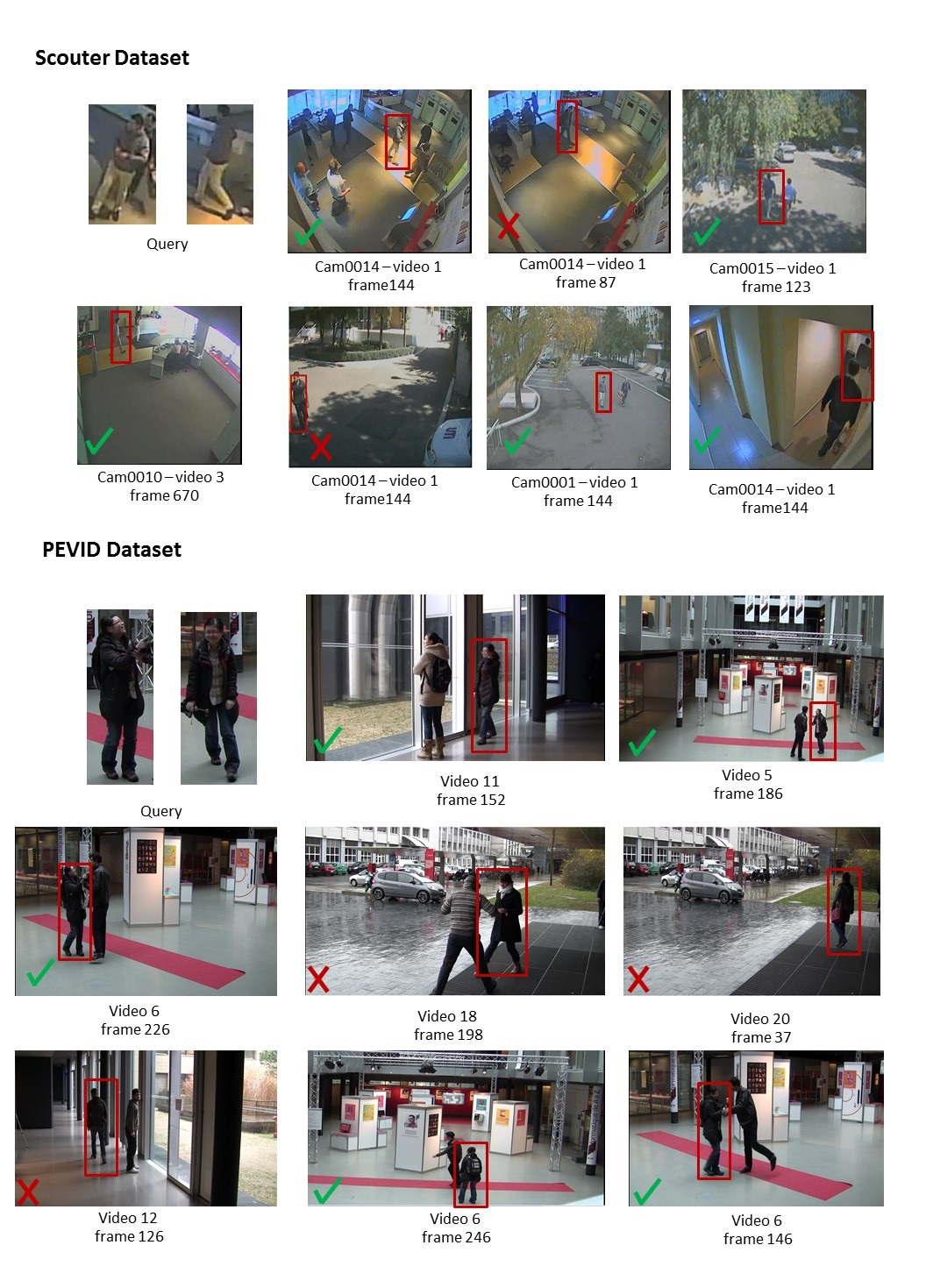

Automatic video surveillance


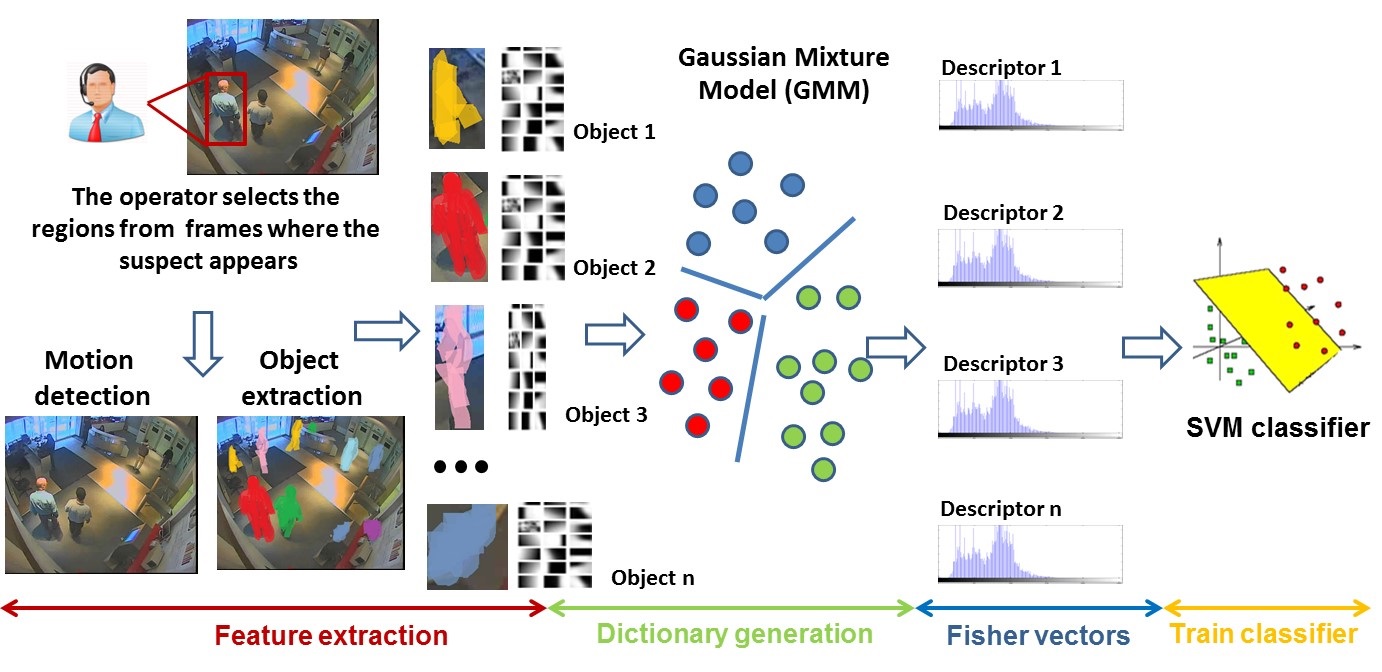

Traditional passive video surveillance has two main drawbacks: (1) finding available human resources to observe the output is expensive; and (2) manual systems are ineffective when the number of cameras exceeds the ability of human operators to keep track of the evolving scene.

Currently, video surveillance systems are mostly passive. They require a human operator to monitor the video feeds on a screen and to alert security crews when their assistance is required in case of emergency. To remove these drawbacks, recent years have seen extensive research activity proposing new ideas, solutions and systems for robust automated surveillance.

In general, all existing approaches rely on an efficient content description of the video information as an intermediate step, namely: color and texture, shape, audio and feature points.

During this project, an automated surveillance system was developed that exploits the Fisher Kernel representation in the context of a multiple-instance object retrieval task. The main purpose of the proposed algorithm is to track a list of persons across several video sources, using only a few training examples. In the first step, the Fisher Kernel representation describes a set of features as the derivative of the log-likelihood with respect to the parameters of the generative probability distribution that models the feature distribution. Then, we learn the generative probability distribution over all features extracted from a reduced set of relevant frames. The proposed approach shows significant improvements and we demonstrate that Fisher kernels are well suited for this task. We demonstrate the generality of our approach in terms of features by conducting an extensive evaluation with a broad range of keypoint features. We also evaluate our method on two standard video surveillance datasets, attaining superior results compared to state-of-the-art object recognition algorithms.
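The Fisher Kernel description above can be sketched in code under one explicit assumption: the generative model is a Gaussian mixture with diagonal covariances, a common choice that the text does not confirm. Only the gradient with respect to the component means is computed, using the usual normalisation by the number of features and the square root of the mixture weight. All names and toy values are hypothetical.

```python
from math import exp, log, pi, sqrt


def log_gaussian(x, mean, var):
    """Log-density of a diagonal-covariance Gaussian at x."""
    return -0.5 * sum(
        log(2 * pi * v) + (a - m) ** 2 / v for a, m, v in zip(x, mean, var)
    )


def posteriors(x, weights, means, variances):
    """Soft assignment of x to each mixture component (numerically stabilised)."""
    logs = [
        log(w) + log_gaussian(x, m, v)
        for w, m, v in zip(weights, means, variances)
    ]
    top = max(logs)
    exps = [exp(value - top) for value in logs]
    total = sum(exps)
    return [e / total for e in exps]


def fisher_vector_means(features, weights, means, variances):
    """Normalised gradient of the log-likelihood with respect to the GMM means."""
    n = len(features)
    dim = len(means[0])
    grads = [[0.0] * dim for _ in means]
    for x in features:
        gamma = posteriors(x, weights, means, variances)
        for k, (m, v) in enumerate(zip(means, variances)):
            for i in range(dim):
                grads[k][i] += gamma[k] * (x[i] - m[i]) / sqrt(v[i])
    return [
        g / (n * sqrt(weights[k])) for k in range(len(means)) for g in grads[k]
    ]


# Toy mixture with two components and two keypoint descriptors from one object.
weights = [0.5, 0.5]
means = [[0.0, 0.0], [4.0, 4.0]]
variances = [[1.0, 1.0], [1.0, 1.0]]
features = [[0.5, 0.0], [3.5, 4.0]]

fv = fisher_vector_means(features, weights, means, variances)
print(fv)
```

Each entry tells how the corresponding mean would have to shift to better explain the observed features, so two feature sets drawn from the same object tend to produce similar vectors that a linear classifier can compare.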
